Will AR contact lenses ever work?

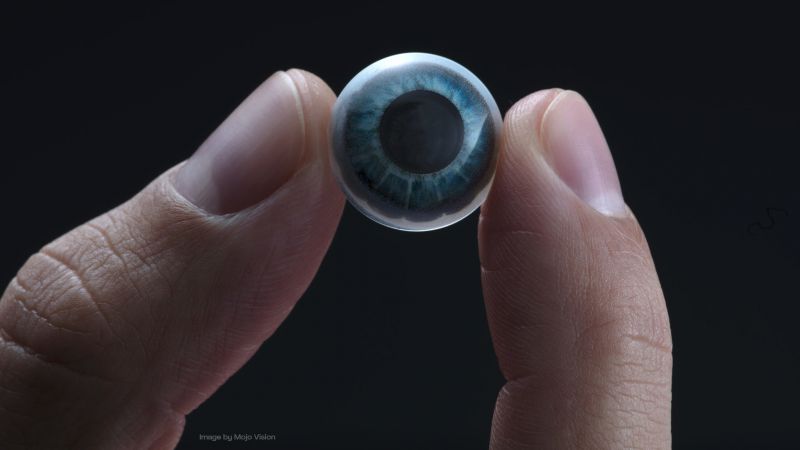

With today's technology, everything seems possible. So how about contact lenses that can display, overlay, and "augment" what we see? That shouldn't be a big problem, should it? The first things that come to mind about AR lenses probably range from "crazy" and "cool" to "that's physically impossible!" And to some extent, all of them are true. The first related patents date back to 1999. A prototype with a single LED was presented in 2009 by the later inventor of Google Glass. And over the last two years, we have kept coming across articles about a company called "Mojo Vision", which has already raised more than 100 million dollars from investors. In this article, we take a closer look at the main challenges such a technology has to overcome before anyone can even think about commercializing such a product or hope for broad customer acceptance.

Let's answer the question by asking more questions

Be warned: this article will raise a lot of questions, and for most of them there are simply no answers yet. But let's not despair. It is an extremely fascinating topic, and it could mean a giant leap for the development of hardware and software in the AR field.

The challenges for useful and functional AR contact lenses arise from a wide range of topics:

- Optics: How can the image be focused at all? Won't the display block the actual view?

- Necessary components: The actual display, power supply, CPU and memory, data transmission, a solution for heat dissipation, ...

- Miniaturization: How can all components fit into a thin and necessarily transparent device?

- Eye tracking, moving frame of reference: A display on the eye is a completely different concept from any other AR display.

- Simple/text augmentation: People read text by moving their eyes, but a contact lens display is fixed to the eye!

- "Real" augmentation: Recognizing and annotating real-world scenes and objects requires a camera or a similar sensor.

- Interaction: How can users control or change what they see using only their eyes?

- Stereoscopic view for both eyes: Places extreme demands on positional accuracy.

- Safety: Protection against excessive brightness and malfunction, toxicity of materials, oxygen supply to the eyes.

- Customization: Requires individually fitted (micro)lenses.

- Longevity vs. price: How long do normal contact lenses last? Will users of AR lenses have to buy new ones every few months?

- Privacy/security: Concerns about spying and eavesdropping, data security of wireless transmissions.

Optics - staying realistic

The most obvious problem with trying to focus on something like a display on a contact lens is that it cannot be done. Period. An object located on the surface of a lens (or very close to it) simply cannot be brought into focus. It cannot even produce a real image, only a virtual one. In addition, the human eye cannot focus on anything closer than about 10 cm, and it cannot keep more distant objects sharp at the same time. Every pixel (or every small group of pixels) of such a display therefore needs a precisely tuned and positioned microlens or microlens array that corrects the optical path and allows a focused image to form on the retina.
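To get a feeling for the scale of the problem, here is a minimal sketch. It assumes the simplified thin-lens relation that the extra focusing power needed for an object is the reciprocal of its distance in metres (in dioptres), and a hypothetical display distance of 1 mm from the eye. The function and constant names are illustrative only.

```python
def extra_power_dioptres(object_distance_m: float) -> float:
    """Extra focusing power (dioptres) needed to focus on an object at the
    given distance, relative to an eye relaxed for infinity.
    Simplified thin-lens assumption: power = 1 / distance."""
    if object_distance_m <= 0:
        raise ValueError("distance must be positive")
    return 1.0 / object_distance_m


NEAR_POINT_M = 0.10         # closest distance the eye can focus on (from the text)
DISPLAY_DISTANCE_M = 0.001  # assumed: display roughly 1 mm in front of the eye

near_point_power = extra_power_dioptres(NEAR_POINT_M)
display_power = extra_power_dioptres(DISPLAY_DISTANCE_M)

print(f"Focusing at the near point needs about {near_point_power:.0f} D")
print(f"Focusing on the display would need about {display_power:.0f} D")
print(f"That is a factor of {display_power / near_point_power:.0f} beyond the eye's limit")
```

Under these assumptions the gap is about two orders of magnitude, which is why the focusing has to be done by microlenses in the contact lens rather than by the eye itself.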

At these scales (roughly 1 mm from the cornea), however, even small deviations from this alignment can quickly lead to a blurred image on the retina and to distortions in the perceived distance and size of the image. Such deviations include a varying gap between contact lens and cornea caused by different amounts of tear fluid, a loose fit, eye movements, and so on. In short: that's not good.

The next problem is the occlusion of the real world by something that sits in the optical path: no light from the real world will reach the retina if it is blocked by the display and its microlens arrays! In the ideal case (that is, if the occluding object sits on or very close to the principal plane of the lens), the result would merely be a slight reduction in brightness. In reality, however, a display in a contact lens is so far from the center of the eye's lens that the distance amounts to a considerable fraction (about 30-40 %) of the eye's focal length. Anything in the optical path therefore causes visible artifacts such as diffraction fringes and local loss of brightness (especially with very small, daylight-adapted pupils), blurring of sharp contours, and noticeable streaks of light and shadow (try it yourself: squint and look through your eyelashes). This is particularly harmful when the display is placed on the optical axis and aimed at the fovea, the area of sharpest vision.

And what about where the image is actually seen, and how large it will be? The microlens array has to "spread" the pixels precisely instead of focusing them all onto the same microscopic spot on the retina, which would result in an "image" with no extent. There will also be no augmentation in the peripheral field of view unless the microlenses "project" some lower-resolution pixels into the eye at an angle.

Required components

What about all the necessary hardware components, which either have to be embedded in the contact lens itself or placed in an additional external device that the user has to wear close to the eyes? A fully autonomous, self-contained AR contact lens would need a lot of components, essentially a complete computer setup:

- The actual display, including the microlens array

- Power supply/storage

- ARM-type CPU

- Memory

- Peripherals for data transmission, internet

- Eye-tracking system (gyroscope/accelerometer)

- Real-world tracking system (camera)

- Interaction (gesture recognition)

And yes, we are still talking about something as small as a contact lens. Remember how people say it is incredible how much technology is packed into a modern smartphone? Compare that with something small enough to fit on your fingertip, and then tell us how likely that seems to you.

Miniaturization. Making things really, really small.

While we are on the subject of small objects, let's make it even more complicated: how do we fit many components into a transparent object? This calls for an even smaller form factor than anything found in today's smartphones and microelectronics.

Some parts can probably be hidden behind an artificial iris, but that will impair your night vision (when your pupils are large) and your ability to see in dark surroundings. Then again, improving your night vision is probably one of the reasons you would wear AR lenses in the first place... What about heat dissipation and absorption? You certainly do not want anything touching your eye that heats up to more than 42 °C. Ever. Safety measures are therefore required, especially with regard to possible device malfunctions. Even if all components consume little power, heat is still generated. How is that heat carried away? Are thermal radiation and simple heat exchange with the tear fluid enough? Someone should figure that out before people burn their eyes.

For a non-embedded version (that is, one or more external devices, which immediately lowers user acceptance), everything also has to be transmitted wirelessly: power via induction (close to the eyes and brain, unless the battery is embedded in the lens), data via wireless transmission (close to the eyes and brain), possibly even a complete screen feed or video stream ("remote display").

Eye tracking, moving frame of reference

The entire paradigm of how we look at something, and how such an image has to be presented, differs completely between an image on a contact lens and every other existing display system. With photographs, monitors, basically everything in the real world, and even head-mounted displays for VR or AR, the images are essentially static, and it is the eyes that have to move and "scan" the image to get a clear view of what is shown. Note that with HMDs the image has to rotate and move along with the head. Head movements, however, are considerably slower than eye movements, and these image movements are only needed to "convert" the image orientation from a head-relative frame of reference into a "global", static one.

An image displayed on a contact lens has to adapt to every single eye movement, and these eye movements, the so-called "saccades", are essential for vision. Saccades occur very frequently (several times per second) and are extremely fast (several hundred degrees per second). By definition, the image is presented in an eye-relative frame of reference instead of the global frame of reference of the real world. AR contact lens prototypes such as those shown at CES 2020 do not yet face this essential difference, because they are held in front of the eye and therefore still exist in the real-world frame of reference. Technically speaking, they do not represent a true AR contact lens that is compatible with how we actually behave and live.

There are, however, two physiological effects that such a display device can exploit. The first is the fact that the same pixels always cover the same area of the retina (assuming the contact lens only moves within a few micrometers, which is actually unrealistic). This can be used to design the display hardware for true "foveated rendering": the pixel density can be much higher for the fovea centralis and can decrease continuously, by several orders of magnitude, toward the peripheral field of view.

The other effect is called "saccadic masking", which prevents the brain from processing visual input during and at the end of each eye movement. This can buy the tracking system and the image processor some extra time before an updated and correctly aligned image actually has to be displayed for each new eye position. Our brain is fast, but it is not that fast. In this case, the technology can benefit from that.

Nevertheless, an eye-tracking system is an absolute must-have. Without it, AR contact lenses are practically useless. Today's external eye-tracking systems are usually cumbersome, because they combine one or two cameras, several near-infrared LEDs, and fast image processing that detects the relative positions of the pupil and the LED reflections. For an embedded eye-tracking system, it is essentially sufficient to measure the rotation of the eye around each axis. Inertial sensors can be used for this, and current MEMS gyroscopes may be small enough (and fast enough) for the task. However, all inertial sensors are prone to integration drift, so without additional sensors it will be difficult to reproduce the absolute orientation of the eye reliably at all times.
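A minimal sketch of why integration drift matters. It assumes a perfectly still eye, a gyroscope sampled at a hypothetical 1 kHz, and a hypothetical constant bias of 0.5 °/s. These are illustrative values, not figures from any real sensor datasheet.

```python
def integrate_gyro(rates_dps, dt_s):
    """Integrate angular rates (degrees/second) into an angle (degrees)
    using simple rectangular integration."""
    angle_deg = 0.0
    for rate in rates_dps:
        angle_deg += rate * dt_s
    return angle_deg


SAMPLE_RATE_HZ = 1000  # assumed sampling rate
BIAS_DPS = 0.5         # assumed constant sensor bias, illustrative only
DURATION_S = 60        # one minute of tracking

dt = 1.0 / SAMPLE_RATE_HZ
num_samples = SAMPLE_RATE_HZ * DURATION_S

# The eye does not move at all: the true rate is 0, so the sensor
# reports nothing but its own bias in every sample.
measured_rates = [BIAS_DPS] * num_samples

drift_deg = integrate_gyro(measured_rates, dt)
print(f"Apparent eye rotation after {DURATION_S} s: {drift_deg:.1f} degrees")
```

Even this small assumed bias accumulates to an orientation error of tens of degrees within a minute, which is why an absolute reference from an additional sensor is needed to reset the estimate.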

Even with precise tracking, small movements of the contact lens relative to the eye are unavoidable, especially during high-speed saccades. Classic contact lenses with a translating bifocal design actually rely on this fact. These movements have to be compensated somehow, otherwise the perceived image will shift constantly and may even go out of focus. If we depend on the information we are trying to see, that is not just annoying but potentially dangerous.

Simple/text augmentation. Sounds easier than it is.

The whole principle of reading is based on rapid eye movements, the "saccades". When looking at any piece of text, only very few letters (let alone words) are in focus at a time, because anything too far from the optical axis is no longer projected onto the fovea centralis. So only one or two words can be read at once. Don't believe it? Try it: look at any word in this article and try to read the neighboring words, or the words above and below it, without moving your eyes. You will be surprised how limited your vision is!

And this is where the problem of the frame of reference comes in again: in the real world, text is static, and we read it by quickly jumping to the next word or group of letters without even noticing. But if we want to display text in the contact lens, the text moves along with the eye. We simply cannot use saccades to "see" other parts of the displayed information, unless eye tracking updates the position of that information so that it becomes static in the real world again (as opposed to "always in the same spot, no matter where we look"). Sounds complicated? It is. How is the lens supposed to tell when it should shift the virtual text or displayed element (because it annotates an object) and when it should keep it in place relative to the eye (in which case you are limited to two or three words)?
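The difference between the two modes can be sketched in a few lines. This is a simplified one-dimensional model with hypothetical parameters (a 10° wide display with 40 pixel columns, angles positive to the right); the function name and all values are assumptions made for illustration only.

```python
from typing import Optional


def display_column(anchor_deg: float, gaze_deg: float,
                   fov_deg: float = 10.0, width_px: int = 40) -> Optional[int]:
    """Column at which a world-locked label must be drawn so that it appears
    to stay at `anchor_deg` in the world while the eye looks at `gaze_deg`.
    Returns None if the label falls outside the display's field of view."""
    offset_deg = anchor_deg - gaze_deg  # label position relative to the line of sight
    half_fov = fov_deg / 2
    if abs(offset_deg) > half_fov:
        return None
    px_per_deg = width_px / fov_deg
    column = int((offset_deg + half_fov) * px_per_deg)
    return min(column, width_px - 1)  # clamp the right edge onto the last column


# World-locked label straight ahead (0 degrees) while the eye moves to the right:
for gaze in (0.0, 2.0, 8.0):
    print(f"gaze {gaze:4.1f} deg -> column {display_column(0.0, gaze)}")

# An eye-locked label, by contrast, is always drawn at the same column,
# for example the display center, regardless of the gaze direction.
EYE_LOCKED_COLUMN = 20
```

In this sketch the world-locked label slides across the display as the eye rotates and disappears once the gaze has moved further than half the display's field of view, while the eye-locked label never moves and can therefore never be scanned with saccades.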

"Real" augmentation

Finally, any form of "real" augmentation of what is seen (for example highlighting certain objects in the real world, annotating or recognizing stationary and moving objects, overlaying street signs with a translation, and so on) requires sensory information from the environment. This is usually done with an RGB or near-infrared camera, while specialized systems may use depth sensors, laser rangefinders, ultrasound, or radar. Here too, such an input sensor would have to be integrated into the lens itself, otherwise the user would have to wear an additional external device.

And if it is an external device, the sensor is monoscopic and, more importantly, its position and field of view differ from what each contact lens "sees". This has to be compensated so that the augmentation matches what the eye actually sees. Another big problem is that a so-called "optical see-through" AR system (as opposed to a "camera see-through" one) can only overlay real things with something brighter, but cannot reduce the visibility of particular real objects. As a result, every augmentation suffers from the typical translucent, ghostly appearance that all optical see-through AR glasses have. Need an example? When translating street signs, it is not really possible to replace or even remove the original text. You can only add displayed content, and only if it is brighter than the actual real object. In addition, occluding virtual objects correctly and perceiving depth require a true depth sensor; a simple camera is usually not enough.

Interaction: how you communicate with your AR lens

How will you control what is displayed? How will you interact with it? How will you select menus and functions? If you only use your eyes, your options are essentially limited to staring and blinking, and the system cannot distinguish between "I am just looking at this part of the image a little longer" and "I want to trigger a button or a menu selection", not to mention "I am actually looking at a real object right now and do not want to trigger any menu interaction at all".

So once again you need an external gadget or handheld device (which is cumbersome), or hand gestures (which require yet another motion sensor to be integrated), or entirely new eye-only interaction metaphors that users first have to learn. And as past experience shows, voice control has very low user acceptance (think of Google Glass in public). It prevents you from using your AR device in the many situations where silence is expected (business meetings, cinema, church, ...), and at the same time it is impaired by ambient noise (street traffic, live concerts, ...). It also requires a microphone, yet another component to be added to the contact lens.

Stereoscopic view - not just for one eye

Having only one contact lens that adds augmented content to your view can be irritating, especially when augmenting real-world objects. Putting an AR contact lens into each eye to enable binocular vision and a stereoscopic presentation of the augmented content, however, places even greater and more extreme demands on the positional accuracy of these lenses. The angular rotation, the "vergence", of the eyes for a shift in depth from, say, 1 m to 2 m is only 0.9° per eye, which corresponds to a displacement of about 0.2 mm on a contact lens.
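These figures can be reproduced with a little trigonometry. The sketch below assumes an interpupillary distance of 63 mm and a distance of 12 mm between the eye's center of rotation and the lens surface; both values are assumptions for illustration and are not given in the article.

```python
import math

IPD_M = 0.063         # assumed interpupillary distance
EYE_RADIUS_M = 0.012  # assumed distance from the eye's rotation center to the lens


def vergence_angle_deg(distance_m: float, ipd_m: float = IPD_M) -> float:
    """Inward rotation of one eye (degrees) when fixating a point
    straight ahead at the given distance."""
    return math.degrees(math.atan((ipd_m / 2) / distance_m))


def lens_shift_mm(angle_deg: float, eye_radius_m: float = EYE_RADIUS_M) -> float:
    """Arc length (mm) traveled by a point on the lens for a given eye rotation."""
    return math.radians(angle_deg) * eye_radius_m * 1000


delta_deg = vergence_angle_deg(1.0) - vergence_angle_deg(2.0)
shift_mm = lens_shift_mm(delta_deg)

print(f"Vergence change from 1 m to 2 m: {delta_deg:.2f} degrees per eye")
print(f"Equivalent displacement on the lens: {shift_mm:.2f} mm")
```

With these assumed dimensions the result lands close to the 0.9° and roughly 0.2 mm quoted above.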

Even the so-called "Retina displays" of current phones offer no more than 300-500 PPI (pixels per inch), although the first small displays with more than 1,000 PPI already exist, and some monochrome prototypes even reach 5,000-15,000 PPI. But even a full-color display with 1,000 PPI and a size of, say, 1 mm on the contact lens would have a resolution of just 40x40 pixels, and the parallax error of 0.2 mm would have to be compensated by shifting the image by about 8 pixels. Put differently: any error of just 1 pixel in such a device (or, equivalently, a shift of the contact lens position by 25 µm!) would already lead to a depth perception error of about 5 cm at a distance of 1 m. This means that any augmentation placed on the real world can easily wobble and shift its position, not only sideways due to involuntary movements of the contact lens, but above all in depth.
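The arithmetic behind this paragraph can be checked with a short script. The display figures (1,000 PPI, 1 mm, 0.2 mm) come from the text; the interpupillary distance of 63 mm and the 12 mm eye radius used for the depth estimate are assumptions, so the depth error is only a rough order-of-magnitude estimate.

```python
MM_PER_INCH = 25.4
IPD_M = 0.063         # assumed interpupillary distance
EYE_RADIUS_M = 0.012  # assumed distance from the eye's rotation center to the lens


def pixel_pitch_um(ppi: float) -> float:
    """Center-to-center pixel spacing in micrometers for a given PPI."""
    return MM_PER_INCH / ppi * 1000


def pixels_across(size_mm: float, ppi: float) -> float:
    """Number of pixels that fit along a display edge of the given size."""
    return size_mm / MM_PER_INCH * ppi


def depth_error_m(lens_shift_m: float, distance_m: float) -> float:
    """Approximate depth error caused by a small lens displacement in one eye.
    The displacement is converted into an angular error, which is then mapped
    to depth via the derivative of the vergence angle atan(h / d)."""
    half_ipd = IPD_M / 2
    angle_error_rad = lens_shift_m / EYE_RADIUS_M
    return angle_error_rad * (distance_m ** 2 + half_ipd ** 2) / half_ipd


pitch_um = pixel_pitch_um(1000)
resolution = pixels_across(1.0, 1000)
parallax_px = 0.2 / (pitch_um / 1000)  # 0.2 mm expressed in pixels
error_m = depth_error_m(pitch_um * 1e-6, 1.0)

print(f"Pixel pitch: {pitch_um:.1f} um")
print(f"Pixels across 1 mm: {resolution:.1f}")
print(f"0.2 mm parallax shift: {parallax_px:.1f} pixels")
print(f"Depth error for a 1-pixel shift at 1 m: {error_m * 100:.1f} cm")
```

Under these assumptions a single-pixel error translates into a depth error of several centimeters, the same order of magnitude as the figure in the text; the exact value depends on the assumed eye geometry.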

In addition, a stereoscopic view requires the exact position of the eye and not just its rotation, obtained either from another inertial sensor (in this case an accelerometer) or from the camera-based real-world tracking technology mentioned above.

Safety first

Of course, there are also safety aspects to consider. An AR lens intended for dark environments would help minimize power consumption and heat generation. But what if we want to use the lenses outdoors on a normal day? Especially under a clear sky, white surfaces can easily reach a luminance of more than 1000 cd/m². To see any augmented content on top of that, an equally powerful display would be required. And by the way, what happens if the hardware fails and projects full brightness straight onto the center of your retina while you are in a dark room and your eyes have already adapted to the darkness? Remember that you cannot close your eyes to protect yourself: the contact lens sits underneath your eyelid...

Last but not least: what about the toxicity of the materials used, the electronics, or even an embedded battery? What about the common problem of reduced oxygen supply to the eyes during extended wear, which occurs even with normal contact lenses? Imagine waking up after a long night with your AR contact lenses still in your eyes. Discomfort is probably the least of your worries.

Customizing your lens

Since the required microlenses have to be matched precisely to the physical shape and parameters of your eye, they also have to take into account your vision correction and the exact shape of your cornea. This means that every AR contact lens has to be manufactured according to the individual prescription of each wearer, much like prescription glasses. Another problem is that people who wear corrective glasses (and want to keep doing so) need custom contact lenses in which only the microlens display is adjusted, while their regular vision is left unaffected and uncorrected, because the glasses already take care of that.

Longevity vs. price

Because of the miniaturization, the amount of technology that has to be integrated into AR lenses, and the individual fitting required, there will be no cheap AR lenses. How long do you usually use a conventional contact lens before you have to replace it? A few weeks? Months? Will you be willing to buy new AR contact lenses several times a year?

Privacy, security, and all that

And there will be the usual concerns about privacy, security, and ethics, especially with devices as small and inconspicuous as contact lenses. Many companies and institutions rely on data security and do not allow cameras or recording devices on their premises. How will that be enforced? Will eye examinations and mandatory removal of contact lenses be required before entering such places, especially if the lenses use some kind of built-in image recognition for augmented content? What about electromagnetic interference in airplanes and similar environments? And if wireless data is transmitted to external devices, how do you prevent these data streams from being intercepted for malicious purposes? Or the other way around: what if someone hacks this data stream and sends you completely different images? For example, a white image at maximum brightness, or extremely flickering, seizure-inducing images.

Making the impossible possible? Maybe one day

Many of these open questions can be solved. But for most of the problems mentioned above, there are currently no suitable answers or viable solutions. It is dangerous to start the hype now and promise the moon when a proper, working solution that users will accept is still at least 5 to 10 years away. On the other hand, the time from first research to a viable commercial product is generally long, often around 20 years, even today. Now would be a good time to engage in the basic research for such a technology, because it has a lot of potential.

But not right now.

Do not expect to buy a contact lens in the near future on which you can watch 4K movies on Netflix or see the name tags of people in a meeting, let alone search the internet during a conversation or read a book. Investors, and especially the media, can easily be dazzled by misleading and deliberately edited marketing videos and by false representations of physical facts. It is therefore crucial to think carefully through the problems such a technology entails. We do see opportunities for compensating visual impairments beyond what conventional contact lenses can do, and for additional medical sensor input. These will most likely be the first usable devices, as opposed to a fully augmented, high-resolution AR lens that needs no external devices.
